Alexa Skills: technical overview of Alexa

In this article I describe the technical architecture behind Alexa that makes skills work.

The following article is an excerpt from my book “Developing Alexa Skills”. The book is available on Amazon from 8,99€. It is of interest to developers and to those who want to become one.


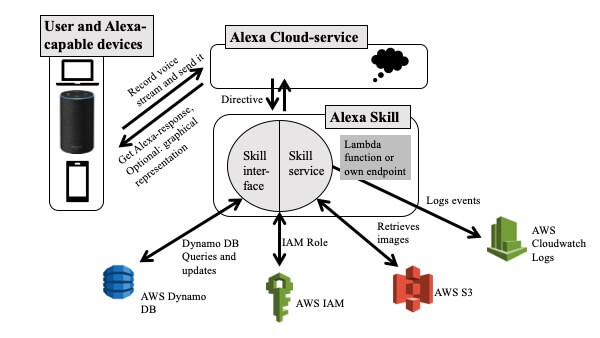

Here is a schematic overview of Alexa.

The Alexa Cloud service receives what the user says and passes it on to the skill as a request. This request represents the event the skill has to handle at the time of execution, and the skill answers it with a response.
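To make the request/response cycle concrete, here is a minimal sketch of a skill backend that receives one request as a Python dict and returns one response dict. The field names `request.type`, `request.intent.name`, `outputSpeech` and `shouldEndSession` follow the Alexa Skills Kit JSON interface; the function names and spoken texts are hypothetical, and a real skill would normally use the ASK SDK instead of building the JSON by hand.

```python
import json


def build_response(text: str, end_session: bool = True) -> dict:
    """Wrap plain text in the response envelope of the Alexa JSON interface."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": end_session,
        },
    }


def handle_request(event: dict) -> dict:
    """Map one incoming request (the event) to one response."""
    request = event["request"]
    request_type = request["type"]
    if request_type == "LaunchRequest":
        # The user opened the skill without a specific request: keep the session open.
        return build_response("Welcome! What would you like to do?", end_session=False)
    if request_type == "IntentRequest":
        intent_name = request["intent"]["name"]
        return build_response(f"You triggered {intent_name}.")
    if request_type == "SessionEndedRequest":
        # Alexa does not speak a reply to this request, so the response stays empty.
        return {"version": "1.0", "response": {}}
    return build_response("Sorry, I can't handle that request.")


if __name__ == "__main__":
    sample_event = {
        "version": "1.0",
        "request": {"type": "IntentRequest", "intent": {"name": "AMAZON.HelpIntent", "slots": {}}},
    }
    print(json.dumps(handle_request(sample_event), indent=2))
```

Each call handles exactly one event; state that must survive between turns would have to travel in the session, which this sketch leaves out.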

Skill Interface and Skill Service

A Custom Alexa Skill consists of the Skill Interface and the Skill Service. Simply put, the Skill Interface is the frontend: the voice user interface (VUI), which is configured via the voice interaction model. The Skill Service is the backend, where the logic of your skill lives and is executed.

Other skill types have the same structure, but their Skill Interface is provided by Amazon. Only Custom Skills allow you to define it yourself.

Skill Interface and interaction model

What the developer can define in the Skill Interface depends on the skill type. The Skill Interface consists mainly of the interaction model: the file that contains all intents, utterances and slots of an Alexa skill, plus an optional dialog model for multi-turn dialogs.

It thus defines the structure of the conversation and the voice interface through which users interact with the skill. Interaction models are defined separately for each language, each in its own JSON file stored in the models folder. For example, if a skill is available in German and English, the following files are stored in /models:

- en-US.json

- de-DE.json
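The one-file-per-locale layout can be sketched as follows. This assumes a project directory with a `models` subfolder as described above; `minimal_model`, `write_models` and the invocation names are hypothetical, and the generated model is only a skeleton (a deployable model also needs intents).

```python
import json
import tempfile
from pathlib import Path


def minimal_model(invocation_name: str) -> dict:
    """Skeleton interaction model: only a languageModel with an invocation name."""
    return {
        "interactionModel": {
            "languageModel": {"invocationName": invocation_name, "intents": [], "types": []}
        }
    }


def write_models(project_dir: Path, models_by_locale: dict) -> list:
    """Write one <locale>.json file per language into <project_dir>/models."""
    models_dir = project_dir / "models"
    models_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for locale, model in models_by_locale.items():
        path = models_dir / f"{locale}.json"
        # ensure_ascii=False keeps umlauts in German models readable
        path.write_text(json.dumps(model, indent=2, ensure_ascii=False), encoding="utf-8")
        written.append(path)
    return written


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        paths = write_models(
            Path(tmp),
            {"en-US": minimal_model("travel planner"), "de-DE": minimal_model("reiseplaner")},
        )
        print([p.name for p in paths])  # ['en-US.json', 'de-DE.json']
```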

The interaction model can also contain rules for prioritizing user requests. It plays the role for Alexa that a graphical user interface plays for a conventional app. The interaction model JSON is divided into three parts: languageModel, dialog and prompts.
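The three parts sit side by side under the top-level `interactionModel` key. The following sketch assembles them; `assemble_model` is a hypothetical helper, and it only adds `dialog` and `prompts` when they are given, since the dialog model is optional.

```python
import json
from typing import Optional


def assemble_model(language_model: dict, dialog: Optional[dict] = None,
                   prompts: Optional[list] = None) -> dict:
    """Combine the three parts; dialog and prompts are only added when present."""
    parts = {"languageModel": language_model}
    if dialog is not None:
        parts["dialog"] = dialog
    if prompts is not None:
        parts["prompts"] = prompts
    return {"interactionModel": parts}


if __name__ == "__main__":
    model = assemble_model(
        {"invocationName": "travel planner", "intents": [], "types": []},
        dialog={"intents": []},
        prompts=[],
    )
    print(list(model["interactionModel"]))  # ['languageModel', 'dialog', 'prompts']
    print(json.dumps(model, indent=2))
```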

languageModel

The languageModel contains all intents of the voice application. Intents represent individual requests a user makes in a dialog, for example asking for a new question in a guessing game, quitting the program, or switching to another mode. Intents are similar to methods in a program. The following intents are created in custom skills by default:

- AMAZON.CancelIntent

- AMAZON.HelpIntent

- AMAZON.StopIntent

- AMAZON.NavigateHomeIntent

- AMAZON.FallbackIntent
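The comparison between intents and methods can be made concrete in the Skill Service: each intent name is routed to one handler function. This is a minimal sketch with hypothetical handler names and texts; only the `AMAZON.` intent names come from the list above.

```python
from typing import Callable, Dict, Optional


def handle_help(slots: dict) -> str:
    return "You can ask me for a new question or say stop."


def handle_stop(slots: dict) -> str:
    return "Goodbye!"


def handle_fallback(slots: dict) -> str:
    return "Sorry, I didn't understand that."


# Each intent name maps to one handler, much like a method name maps to a method.
INTENT_HANDLERS: Dict[str, Callable[[dict], str]] = {
    "AMAZON.HelpIntent": handle_help,
    "AMAZON.StopIntent": handle_stop,
    "AMAZON.CancelIntent": handle_stop,  # cancel and stop can share a handler
    "AMAZON.FallbackIntent": handle_fallback,
}


def dispatch(intent_name: str, slots: Optional[dict] = None) -> str:
    """Call the handler for an intent; unknown intents fall back to handle_fallback."""
    handler = INTENT_HANDLERS.get(intent_name, handle_fallback)
    return handler(slots or {})


if __name__ == "__main__":
    print(dispatch("AMAZON.CancelIntent"))  # Goodbye!
```

A lookup table keeps adding a new intent down to one new function and one new entry, instead of a growing chain of `if` statements.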

Each intent contains three fields: name with the intent's name, slots with zero or more slots, and samples with the so-called utterances.
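A small builder shows the three fields of an intent object. `make_intent` and `PlanVacationIntent` are hypothetical; `AMAZON.City` is one of Amazon's built-in slot types, and the `{vacationPlace}` slot is the example used in this article.

```python
import json
from typing import List, Optional


def make_intent(name: str, samples: List[str], slots: Optional[List[dict]] = None) -> dict:
    """Build an intent object with the three fields name, slots and samples."""
    return {"name": name, "slots": slots or [], "samples": samples}


if __name__ == "__main__":
    plan_trip = make_intent(
        "PlanVacationIntent",
        samples=["plan a trip to {vacationPlace}", "I want to travel to {vacationPlace}"],
        slots=[{"name": "vacationPlace", "type": "AMAZON.City"}],
    )
    stop = make_intent("AMAZON.StopIntent", samples=[])
    print(json.dumps([plan_trip, stop], indent=2))
```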

Utterances

Under samples, one or more sample utterances can be mapped to an intent to trigger it, so users don’t have to say one fixed sentence. Users also don’t know the sample sentences, so the samples should cover as wide a range of natural-sounding phrasings as possible. For example, not everyone says “Tell me…”; others say “Give me…”, “Find me…”, and so on.
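One way to get a broad spread of phrasings is to combine different openers with the same request. The sketch below is a hypothetical helper, not part of any Alexa tool; the generated sentences should still be reviewed, because not every combination sounds natural.

```python
from itertools import product
from typing import List


def expand_samples(openers: List[str], requests: List[str]) -> List[str]:
    """Combine every opener with every request and drop duplicates, keeping order."""
    combined = (f"{opener} {request}".strip() for opener, request in product(openers, requests))
    return list(dict.fromkeys(combined))


if __name__ == "__main__":
    # The empty opener also yields the bare request, e.g. "a question".
    samples = expand_samples(["tell me", "give me", "find me", ""], ["a question", "a new question"])
    for sample in samples:
        print(sample)
```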

Slots

Slots are the optional arguments of an intent, e.g. names, quantities or dates, and are defined by their slot type. In utterances, a slot is referenced by its name in curly brackets. Usually you give slots meaningful names, for example {vacationPlace} for a holiday-planning skill.
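Because slots are referenced by name in curly brackets, a simple check can catch utterances that use a slot the intent never declares. This is a hypothetical helper that assumes slot names consist of word characters; `{travelDate}` is an invented second slot used to trigger the check.

```python
import re
from typing import Set

# Matches a slot reference such as {vacationPlace} inside a sample utterance.
SLOT_REFERENCE = re.compile(r"\{(\w+)\}")


def undeclared_slots(intent: dict) -> Set[str]:
    """Return slot names used in the samples but missing from the intent's slots."""
    declared = {slot["name"] for slot in intent.get("slots", [])}
    referenced = {
        name
        for sample in intent.get("samples", [])
        for name in SLOT_REFERENCE.findall(sample)
    }
    return referenced - declared


if __name__ == "__main__":
    intent = {
        "name": "PlanVacationIntent",
        "slots": [{"name": "vacationPlace", "type": "AMAZON.City"}],
        "samples": ["plan a trip to {vacationPlace}", "travel to {vacationPlace} on {travelDate}"],
    }
    print(undeclared_slots(intent))  # {'travelDate'}
```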

Amazon already provides some slot types and intents; you can recognize them by the prefix AMAZON. Examples of built-in slot types are AMAZON.NUMBER and AMAZON.DATE, as well as the older AMAZON.LITERAL, which has since been deprecated.

Self-created (custom) slot types are stored in the languageModel under “types”, together with all possible values of each slot type.

These custom slot types help Alexa recognize requests more precisely. In a skill for subway timetables, for example, the station names could be defined as a custom slot type. However, Amazon already offers many slot types that contain geographic information, and where one of them fits, it is a good idea to use it. Amazon's built-in slot types can also be extended with further values, as shown in Chapter 5 – Frequently Asked Questions.

The languageModel also contains the invocation name, the name with which users call the skill.
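For the subway example, a custom slot type with values and synonyms might look like the sketch below. The `types`, `values`, `value` and `synonyms` keys follow the interaction model schema; the slot type, stations and intent are hypothetical. Alexa performs its own entity resolution in the cloud, so `resolve_value` is only a simplified, exact-match illustration of how synonyms map to a canonical value.

```python
from typing import Optional

# Hypothetical custom slot type for a subway-timetable skill.
STATION_TYPE = {
    "name": "STATION_NAME",
    "values": [
        {"name": {"value": "Central Station", "synonyms": ["main station", "central"]}},
        {"name": {"value": "Harbour", "synonyms": ["port", "harbor"]}},
    ],
}

# Custom slot types live in the languageModel under "types".
LANGUAGE_MODEL = {
    "invocationName": "subway times",
    "intents": [
        {
            "name": "NextTrainIntent",
            "slots": [{"name": "station", "type": "STATION_NAME"}],
            "samples": ["when does the next train leave from {station}"],
        }
    ],
    "types": [STATION_TYPE],
}


def resolve_value(slot_type: dict, spoken: str) -> Optional[str]:
    """Map a spoken value or synonym to its canonical value (simplified, exact match only)."""
    needle = spoken.strip().lower()
    for entry in slot_type["values"]:
        name = entry["name"]
        candidates = [name["value"], *name.get("synonyms", [])]
        if needle in (candidate.lower() for candidate in candidates):
            return name["value"]
    return None


if __name__ == "__main__":
    print(resolve_value(STATION_TYPE, "Main Station"))  # Central Station
```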

Dialog

The languageModel is followed by the dialog elements. A dialog is a conversation between the user and Alexa in which Alexa asks questions and the user answers; it takes place within a session. A dialog is bound to an intent that represents the user’s overall request. Alexa continues a dialog until all slots specified by the dialog model are filled and, where required, confirmed.

Therefore, only intents whose slots need to be filled are listed in the dialog part; intents without slots, such as simple statements to Alexa, are not. For each intent with slots, the dialog part specifies whether elicitation or confirmation is required. It also references the prompts that Alexa uses for that intent.
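The rule "continue until all required slots are filled and confirmed" can be illustrated with a dialog section and a function that picks the next prompt. The keys `elicitationRequired`, `confirmationRequired` and `prompts` follow the dialog model schema; the intent, slots and prompt ids are hypothetical, and `next_prompt_id` is a simplified model of the behavior, not how Alexa implements it. Slot-level confirmation is left out for brevity.

```python
from typing import Optional, Set

# Hypothetical dialog section; prompt ids point into the prompts part of the model.
DIALOG = {
    "intents": [
        {
            "name": "PlanVacationIntent",
            "confirmationRequired": True,
            "prompts": {"confirmation": "Confirm.Intent.PlanVacation"},
            "slots": [
                {
                    "name": "vacationPlace",
                    "type": "AMAZON.City",
                    "elicitationRequired": True,
                    "confirmationRequired": False,
                    "prompts": {"elicitation": "Elicit.Slot.vacationPlace"},
                },
                {
                    "name": "travelDate",
                    "type": "AMAZON.DATE",
                    "elicitationRequired": True,
                    "confirmationRequired": False,
                    "prompts": {"elicitation": "Elicit.Slot.travelDate"},
                },
            ],
        }
    ]
}


def next_prompt_id(dialog: dict, intent_name: str, filled: Set[str],
                   intent_confirmed: bool = False) -> Optional[str]:
    """Return the id of the next prompt, or None once the dialog is complete."""
    for intent in dialog["intents"]:
        if intent["name"] != intent_name:
            continue
        # First ask for every required slot that is still empty.
        for slot in intent["slots"]:
            if slot["elicitationRequired"] and slot["name"] not in filled:
                return slot["prompts"]["elicitation"]
        # Then confirm the whole intent if the dialog model asks for it.
        if intent["confirmationRequired"] and not intent_confirmed:
            return intent["prompts"]["confirmation"]
        return None
    return None  # intents without a dialog entry need no further turns
```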

Prompts

Alexa’s prompts for a dialog are defined in the third part of the JSON, and each has an id by which the dialog model references it. With a prompt, Alexa can ask a question or give an answer. You can also specify several variations, i.e. different sentences, for the same prompt.
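A prompt entry pairs an id with a list of variations. The sketch below uses the `id`, `variations`, `type` and `value` keys of the prompts part; the prompt texts and the helper `pick_variation` are hypothetical. Alexa chooses among the variations itself, so the random choice here only mimics that behavior locally.

```python
import random
from typing import List

# Hypothetical prompts part: each prompt has an id and several variations.
PROMPTS = [
    {
        "id": "Elicit.Slot.vacationPlace",
        "variations": [
            {"type": "PlainText", "value": "Where would you like to go?"},
            {"type": "PlainText", "value": "Which city are you planning to visit?"},
        ],
    },
]


def pick_variation(prompts: List[dict], prompt_id: str, rng: random.Random) -> str:
    """Look up a prompt by id and return one of its variations at random."""
    for prompt in prompts:
        if prompt["id"] == prompt_id:
            return rng.choice(prompt["variations"])["value"]
    raise KeyError(prompt_id)


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so the demo is repeatable
    for _ in range(3):
        print(pick_variation(PROMPTS, "Elicit.Slot.vacationPlace", rng))
```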

An example of an interaction model is the following file. For brevity, it contains only a standard intent (StopIntent), two user-defined intents, a dialog model and a user-defined slot type. For clarity, the brackets are not closed on separate lines but partly on the same line as their content.

The Manual for Alexa Skill Development

If the article interested you, check out the manual for Alexa Skill Development on Amazon, or read the book’s page. On Github you can find all code examples used in the book.